Public Channels

- # discussions

- # keynote_tenenbaum

- # s1_lecun

- # s1_matsuo

- # s1_precup

- # s1_silver

- # s1_sugiyama

- # s2_fiete

- # s2_friston

- # s2_nagai

- # s2_sahani

- # s2_taniguchi

- # s3_botvinick

- # s3_kanai

- # s3_langdon

- # s3_nakahara

- # s3_wang

- # s4_dicarlo

- # s4_kamitani

- # s4_moran

- # s4_sejnowski

- # s4_takahashi

- # s5_churhland

- # s5_doya

- # s5_ema

- # s5_kitano

- # s5_russell

Private Channels

Direct Messages

Group Direct Messages

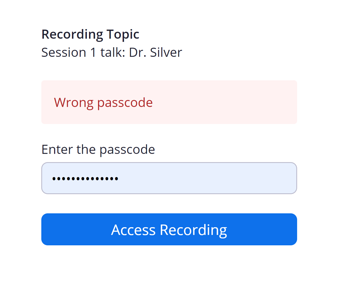

This is a discussion channel for “Self-Supervised Learning” by Dr. Yann LeCun (Facebook AI Research & New York University). The link to the talk is below. Please do not share the link with anyone outside of this Slack workspace. The access passcode can be found in the announcement channel. URL: https://vimeo.com/470474729 (27 minutes)

Exciting talk, lots to think about. What surprised me most is that, on one of the last slides, you've put perception and models of the world into cleanly separate modules. Surely perception - beyond very early sensory stages - is very tightly interwoven with models of (parts of) the world?

*Thread Reply:* Yes. That said, the role of perception is to estimate the state of the world, while the role of the world model is to predict how this state of the world will evolve.

Great talk, very inspiring! Regarding “humans/animals learning very quickly”, it seems there are mechanisms at play that push our learning towards certain types of “behavior (data)”. Machines don’t have such mechanisms currently. Do you believe that the models/algorithms used in DL right now (EBM included) are enough to learn such behavior from limited interactions? Or do we need new ways of thinking about the problem? Thanks!

*Thread Reply:* There is such a bias in deep learning systems, simply due to the architecture of the network. But I agree that humans and animals have hardwired "seeds" of behavior that drive the learning of particular concepts and drive behavior towards certain types. I would surmise that most of these biases are encoded through "objective functions" rather than through hardwired behaviors.

*Thread Reply:* The architectural biases are strongest in the periphery. The retina, for example, is highly differentiated and stereotyped with over 100 unique cell types. The thalamus has fewer cell types, but is also highly stereotyped. These stereotyped circuits are replicated across the visual field, which is the bias toward a convolutional architecture. The farther you go up the hierarchy, the larger the receptive fields and the stronger the impact of learning on response properties.
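The link between replicated circuits and a convolutional bias can be illustrated with a minimal sketch. It is only a toy: the function name `conv1d`, the two-tap `edge_detector` kernel, and the example signals are hypothetical choices made for this illustration, not anything shown in the talk. One small set of weights is reused at every position, so shifting the input simply shifts the response.

```python
def conv1d(signal, kernel):
    """Valid 1D cross-correlation: the same kernel weights are reused at every position."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


# Hypothetical "stereotyped circuit": a two-tap detector for local changes.
edge_detector = [-1, 1]

signal = [0, 0, 1, 1, 0, 0, 0]
shifted = [0, 0, 0, 1, 1, 0, 0]  # the same pattern moved one step to the right

response = conv1d(signal, edge_detector)
shifted_response = conv1d(shifted, edge_detector)

# Because the weights are shared across positions, the response to the shifted
# input is the original response moved by one step.
print(response)
print(shifted_response)
```

Nothing about position has to be learned separately here, which is the sense in which replicating one circuit across the visual field acts as an architectural prior.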

*Thread Reply:* Thanks @Terry Sejnowski! I have been wondering whether the “architecture/loss biases” would be enough for “learning quickly”, and it is likely that minor fixes to learning algorithms would speed things up for DL models. Thanks to both of you for your answers!

A closely related question regarding the fascinating issue of “humans/animals learning very quickly” - it could be argued that species development in the course of biological evolution incorporates in the structure/wiring of the brain already some "evolutionary" prior about our world/animal motorics (like baby giraffes walking within hours of birth). What is your opinion about what should be the balance between specificity (built in priors or "meta-priors") and generality of future ML algorithms? Is this related to your final comments that there is no General AI but rather Rat-AI, Cat-AI or Human-AI?

*Thread Reply:* For a given brain capacity, the more innate structure/behavior there is, the less adaptivity there is. It's a trade-off. More innate structure allows faster learning, but is less robust in the face of changing environments that require learning and adaptation.

Intelligence is always specialized because there is no possibility of learning efficiently from a blank slate (there are theorems about this in learning theory called "no free lunch theorems").

Many of the limits of human intelligence are due to the physical constraints of the connection patterns in the brain.

Interesting talk. Thank you. In the case of humans, parents of human children would go crazy over the idea of self-supervised-learning for their children, and learning about the world model and common sense from videos (TV/YouTube). Trying to ‘automate’ the process with self-supervised-learning may be an efficient way, however humans (and common sense) are ‘nurtured’. In the end, will we need to ‘nurture’ these systems, especially for common sense and ethics?

*Thread Reply:* What humans learn from parents and others builds on top of an enormous volume of basic knowledge. We take this basic knowledge for granted, but we do learn it in the first few days, weeks, and months of life. I'm talking about things like: the world is 3-dimensional, there are objects, the world obeys intuitive physics.... Other "smart" animals learn most of this with no help. For example, octopuses are quite smart but only live a couple of years and never meet their parents.

Thank you very much for the great talk! Could you possibly clarify the general idea of the RLV autoencoder architecture? As I understand it, the latent variable is regularized so that it does not gather the information from the input-related prediction "h". Why is it important to separate the previous-experience-related predictions from the latent variable representations? From the perspective of how the brain could process predictions, it seems more reasonable to fuse both into a common internal model. Could you emphasize (again?) what is special about this separation in terms of network behavior, and argue whether that kind of separation should also be algorithmically plausible for the brain?

*Thread Reply:* Any time the desired output of a system is used (in any way) to compute a latent variable that helps with the prediction of said desired output, then the information capacity of this latent variable must be minimized, for example through a regularization term. Otherwise the system will cheat. An unregularized auto-encoder will learn the identity function, which is useless in the sense that it does not make the system capture the dependencies between the variables. In a prediction context, the role of the latent variable is to capture whatever complementary information about the desired output cannot be extracted from the observed input. Again, its information capacity needs to be minimized, otherwise the system will happily ignore the observed input and put all the necessary information in the latent variable.
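The "cheating" described in this reply can be sketched with a toy scalar example. Everything in it is an assumption made for illustration and is not the architecture from the talk: the energy E(x, y, z) = (y - (w*x + z))^2 + lam*|z|, the L1 penalty as the capacity limit, and the names `infer_latent`, `free_energy`, `w`, and `lam`. The latent z is inferred by minimizing the energy, which for this particular form has a closed-form soft-thresholding solution.

```python
def infer_latent(residual, lam):
    """Minimize (residual - z)**2 + lam * abs(z) over z (soft-thresholding)."""
    shrink = max(abs(residual) - lam / 2.0, 0.0)
    return shrink if residual >= 0 else -shrink


def free_energy(x, y, w, lam):
    """Energy of the pair (x, y) after minimizing over the latent z.

    Assumed toy model: the prediction is w * x + z, and lam * |z| limits how
    much information about y the latent is allowed to carry.
    """
    residual = y - w * x          # the part of y that the input cannot explain
    z = infer_latent(residual, lam)
    return (residual - z) ** 2 + lam * abs(z)


# Without regularization the latent absorbs the whole residual, so every y gets
# zero energy: the model has "cheated" and captures no dependency on x.
print(free_energy(x=1.0, y=5.0, w=2.0, lam=0.0))
print(free_energy(x=1.0, y=-40.0, w=2.0, lam=0.0))

# With regularization, a y far from the prediction w * x costs more energy
# than a y close to it.
print(free_energy(x=1.0, y=5.0, w=2.0, lam=1.0))
print(free_energy(x=1.0, y=2.25, w=2.0, lam=1.0))
```

With `lam=0.0` the energy surface is flat in y, which is the useless solution the reply warns about. With `lam > 0` the latent can still account for some residual, but only at a cost, so the energy keeps its dependence on how well the input explains the output.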

A very naive question. Are you saying that human babies quickly learn the world by machine-like self-supervised learning that tries to predict masked portions of memorized scene sequences (while they are asleep)?

*Thread Reply:* Not really. The statement is that baby humans and many animals seem to learn the vast majority of their knowledge through observation, with very little interaction or supervision. My hypothesis (and it is only a hypothesis) is that capturing dependencies between observations and predictions (which may be temporarily unavailable parts of the input) could be a good way to think about this paradigm of learning. I don't know how this could be implemented in the brain, and I only have ideas that are not entirely satisfactory for how to implement this in artificial systems.

Thank you for your great talk. Brain-inspired architectures have significantly advanced subfields of AI (e.g., CNNs for computer vision, recurrent networks for language). Is there a brain-inspired model architecture advancing RL to a similar extent (and if not, what properties should such an RL-specific architecture possess)?

*Thread Reply:* RL, like supervised learning and self-supervised learning, is a paradigm that is somewhat independent of the underlying architecture. So, I'm not sure I can answer your question!

Thank you so much for the great talk! With respect to the development of neural networks from now on, do you think progress should come from designing computational architectures that integrate multiple fields (e.g., dynamical systems, neuroscience), or from reverse-engineering the brain, starting with the design of neural circuits for computing?

*Thread Reply:* In my view, the problem we face is a conceptual one: how could machines learn how the world works by observation? Could machines learn world models by watching videos? This is a problem of prediction under uncertainty, and we do not know how to efficiently represent a set of possible predictions in a high-dimensional continuous space (traditional probabilistic approaches have failed us for this).

The details of how the brain does this don't seem particularly relevant to me. It's too easy to focus on the details of what neurons do and to miss the general principle on which learning in the brain is based. We will not make progress by designing electronic neurons until we know what they should do.

*Thread Reply:* Thank you for taking the time to respond! I’m still very much interested in this space and will certainly continue to follow it and your work.

*Thread Reply:* You can find the passcode in the announcement channel.