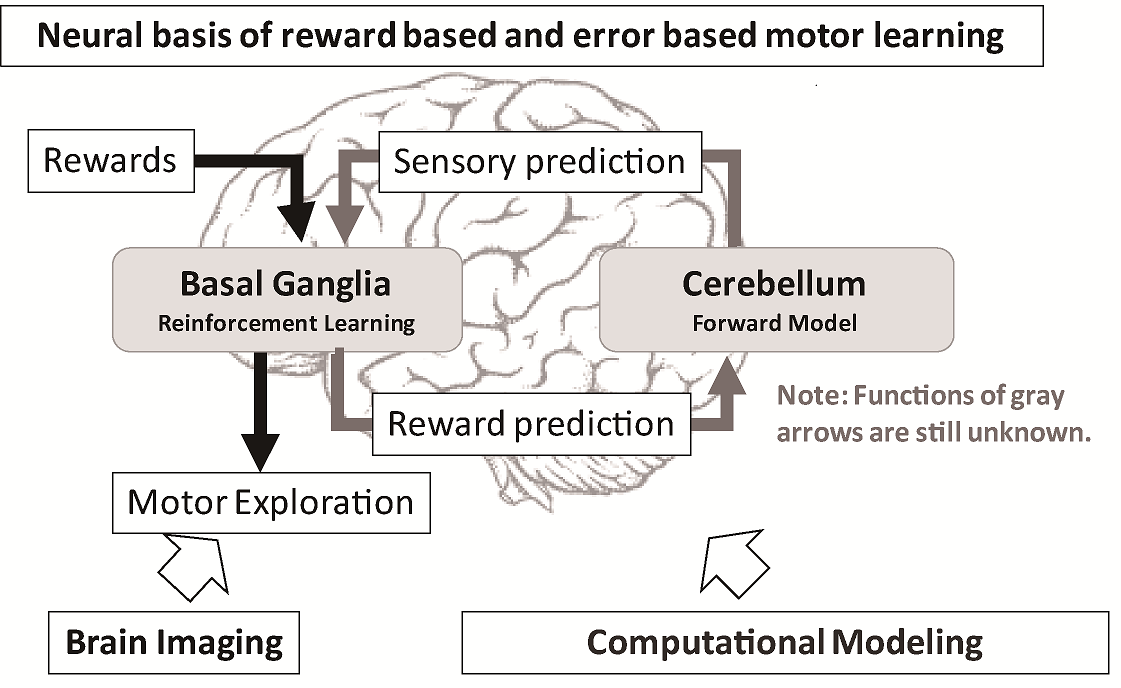

A02 Computational understanding of the neural basis of reward-based and sensory-based motor learning

Using the motor learning architectures of robots as a normative model is an effective way to understand the computational nature of human motor learning in the brain. Conversely, studying human motor control offers hints for developing novel architectures of adaptive robot controllers. In this sense, reinforcement learning during human motor control is an important topic in brain science, as shown by the fact that reinforcement learning was originally developed as a neuron-like adaptive robot controller. However, the role of reinforcement learning algorithms in human motor learning has attracted less attention than their role in decision-making problems and sequential motor planning tasks. Here, we focus on exploration noise during the learning of reach direction and study the neural correlates of reinforcement learning and of the internal forward model through a computational-model-based analysis of functional MRI. Specifically, we aim to clarify the computational role of the internal model in reinforcement learning, the role of reinforcement learning in acquiring the internal model, and the interaction between the two. Ultimately, through collaboration within this research area, we expect to suggest roles for reinforcement learning and the internal model even in the social aspects of cognitive function.
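The kind of computational model that such an fMRI analysis relies on can be illustrated with a toy reaching task. The sketch below is a minimal illustration, not the project's model. It assumes a Gaussian exploration policy over reach direction that is updated by a REINFORCE-style rule with a learned reward baseline, a visuomotor rotation as the sensory perturbation, and a forward model that learns that rotation with a delta rule. On every trial it records a reward prediction error (RPE) and a sensory prediction error (SPE), the kind of trial-by-trial signals that model-based fMRI analyses commonly use as regressors. The function name `simulate_reaching`, every parameter value, the Gaussian reward function, and the 15-degree rotation are hypothetical choices. The two learners also run side by side without interacting, so the interaction the project targets is not modeled here.

```python
import math
import random


def simulate_reaching(n_trials=500, target_deg=30.0, rotation_deg=15.0,
                      explore_sd=5.0, reward_width=20.0,
                      lr_policy=1.0, lr_value=0.2, lr_forward=0.2, seed=0):
    """Toy reach-direction learner that returns per-trial model signals.

    Hypothetical illustration: all names and parameter values are assumptions.
    Returns (rpe, spe, outcomes, final_policy_mean).
    """
    rng = random.Random(seed)
    mu = 0.0        # policy: mean planned reach direction (degrees)
    baseline = 0.0  # critic: running estimate of expected reward
    fm_shift = 0.0  # forward model: estimated visuomotor rotation (degrees)
    rpe, spe, outcomes = [], [], []

    for _ in range(n_trials):
        noise = rng.gauss(0.0, explore_sd)   # exploration noise on reach direction
        command = mu + noise                  # executed motor command
        predicted = command + fm_shift        # forward-model prediction of hand direction
        observed = command + rotation_deg     # actual (rotated) hand direction
        # Assumed graded reward: 1 at the target, decaying with angular error.
        reward = math.exp(-(observed - target_deg) ** 2 / (2 * reward_width ** 2))

        delta = reward - baseline             # reward prediction error
        error = observed - predicted          # sensory prediction error

        baseline += lr_value * delta          # critic update
        # REINFORCE with baseline for a Gaussian policy; 1/sigma^2 folded into lr.
        mu += lr_policy * delta * noise
        fm_shift += lr_forward * error        # delta-rule forward-model update

        rpe.append(delta)
        spe.append(error)
        outcomes.append(observed)

    return rpe, spe, outcomes, mu


if __name__ == "__main__":
    rpe, spe, outcomes, mu = simulate_reaching()
    # SPE starts at the full rotation and shrinks as the forward model adapts;
    # outcomes drift toward the target as the policy exploits rewarded noise.
    print(spe[0], spe[-1], sum(outcomes[-100:]) / 100)
```

In an actual model-based analysis, the per-trial `rpe` and `spe` sequences would typically be fitted to behavior first and then convolved with a hemodynamic response function before entering a GLM; those steps are outside this sketch.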